Every Mock Is a Bet Against Your Design

Engineers flock to mocking frameworks as a way to save time. In reality, a mock is a band-aid provided by framework authors, one that tempts teams to ignore their subpar design decisions.

We have decades-worth of mission-critical legacy code.

There are no tests. I wish we had more.

The original team left.

We need to move quickly.

Writing tests in our code base is messy.

We keep seeing bugs and risky roadmap promises.

We’re about to try [unicorn AI SaaS for testing automation with LLM codegen]

… but I’m not sure. Can you help us, maybe mocks with a sprinkle of DDD?

That’s a recurring conversation with most of my coaching clients.

Lack of tests is painful. Full stop. Mocking framework vendors and QA automation scams are lining up to sell you band-aids and quick fixes. But they don’t have your best interests in mind. Your best alternative is figuring out what makes your code difficult to test in the first place. Spoiler: it’s design.

1. The Problem with Mocks

Mocks come with a weird paradox, enabling a shortcut to avoid real design:

They’re a supercharged Swiss Army knife: useful and ingenious, especially when it comes to code generation

Using too many mocks turns your code sticky, and tests that need many mocks come back to bite you sooner or later, preventing refactoring or worse

This creates a moving target of financial trade-offs:

Mocks create a shortcut to shoot up testability in an area hard to isolate

The mocked implementation becomes another piece of code requiring maintenance, making ongoing work in that area more expensive

The decision to refactor around mocks is harder because the engineers have to make a calculated re-investment decision to throw the old mock away and create new ones.

Potentially, this cycle of maintaining mocks (or avoiding refactoring altogether) may be a loop lasting the codebase’s entire lifetime

2. Design » Testability » Mocks (Maybe)

If you are new, welcome to 🔮 Crafting Tech Teams. I publish to you every week on how to become a better engineering leader. As a subscriber you will receive these posts straight to your favorite inbox.


We test for one reason: to increase the throughput of our product organization so it can delight customers and generate revenue.

In that sense it would be foolish to write a test for constraints that a compiler or linter can check for you.

Similarly, it would be foolish not to write a test for a critical functionality your team will end up testing manually.

But not all behaviors are easily testable. What makes some code more difficult to test is its design.

TDD is not the problem; Failing to Design Is

Code that is hard to test lacks testability. Better design improves the testability of the code. Testability is what allows you to write good unit tests (GUTs) for a minimal investment.

The inverse is NOT true. Tests don’t improve testability on their own. Testability also doesn’t magically improve your design. This is the usual counter-argument to the claim that, if you follow TDD to the extreme, “the design will emerge.”

Nonsense! Just because you’re following Michael Jordan’s diet and mimicking his routine doesn’t mean you’ll end up being a top NBA player.

It is far more likely that there is a skill ceiling beyond which you don’t know how to make a complex behavior testable or how to design the thing in the first place due to its essential complexity (e.g. a trading algorithm, or insurance policy across multiple industries, …).

Improve Design, Test Simply, Mocks = Last Resort

Mocks enable a shortcut to increase testability (temporarily) in one area of your code, at the cost of making the surrounding area more expensive to maintain (i.e. degrading its design). They act like a stapler: they close the wound, but they don’t heal it on their own. The long-term solution is education. Learning. Design better.

Learn how to design software so it retains testability

Combine testability and understanding of your domain to write good-unit-tests

Gather feedback on your design decisions to learn which bets were wrong

Refactor when you know you made a mistake, using your GUTs for safety

The counterpart to testing new features and gathering feedback is deciding what to do with the legacy code:

Improve design to make testing and adding new functionality easier

Use test doubles when it makes the design better and the test more practical

Use mocks when (re-)design is uneconomical and testability is mission-critical

Remove the tests and start from scratch when the debt on the mocks is bigger than the cost of a rewrite

3. Extract Stubs from Production Code

Testing sounds like something you do after the real work is done. A chore with a list of checkboxes to inspect. This is where the London vs. Chicago element comes in. Of course you will need some test doubles:

❗️low risk Dummy: Makes test compile, not involved in test

❗️❗️Fake: A shortcut collaborator, helping test the SUT

❗️❗️❗️Stub: A static-value collaborator, used to narrow down a path to be tested

❗️❗️❗️❗️Spy: A stub that also records how it was used

❗️❗️❗️❗️❗️Mock: All of the above (Dynamic Values, Static Values, Records, Fake)

Mocks are the last resort. The best middle ground for producing test doubles is to extract the stable parts of your functions and objects that are naturally going to be executed for the SUT, but that require separation from, or the absence of, harmful side effects.
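To make the five doubles listed above concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the `signUp` function, the `Logger`, `Clock`, `UserStore` and `Mailer` interfaces, and the hand-rolled doubles, which stand in for what a mocking framework would generate.

```typescript
// Hypothetical collaborators of the system under test (SUT).
interface Logger { log(message: string): void }
interface Clock { now(): Date }
interface UserStore { save(email: string, joinedAt: Date): void; has(email: string): boolean }
interface Mailer { send(to: string, subject: string): void }

// Hypothetical SUT: registers a user and sends a welcome mail.
function signUp(email: string, users: UserStore, clock: Clock, mailer: Mailer, logger: Logger): boolean {
  if (users.has(email)) {
    logger.log(`duplicate sign-up: ${email}`);
    return false;
  }
  users.save(email, clock.now());
  mailer.send(email, "Welcome!");
  return true;
}

// Dummy: only there so the call compiles; the happy path never touches it.
const dummyLogger: Logger = { log: () => { throw new Error("dummy should not be used"); } };

// Stub: a static value that pins the path under test.
const stubClock: Clock = { now: () => new Date("2024-01-01T00:00:00Z") };

// Fake: a working shortcut implementation (in-memory instead of a database).
class FakeUserStore implements UserStore {
  private readonly users = new Map<string, Date>();
  save(email: string, joinedAt: Date): void { this.users.set(email, joinedAt); }
  has(email: string): boolean { return this.users.has(email); }
}

// Spy: does no real work, but records how it was used.
class SpyMailer implements Mailer {
  readonly calls: Array<{ to: string; subject: string }> = [];
  send(to: string, subject: string): void { this.calls.push({ to, subject }); }
}

// Mock: a spy that also carries the expectation and verifies it itself.
class MockMailer extends SpyMailer {
  private readonly expectedTo: string;
  constructor(expectedTo: string) { super(); this.expectedTo = expectedTo; }
  verify(): void {
    const ok = this.calls.length === 1 && this.calls[0]?.to === this.expectedTo;
    if (!ok) throw new Error(`expected exactly one mail to ${this.expectedTo}`);
  }
}

// Usage: the same SUT, once observed through a spy and once through a mock.
const spy = new SpyMailer();
const created = signUp("ada@example.com", new FakeUserStore(), stubClock, spy, dummyLogger);

const mock = new MockMailer("bob@example.com");
signUp("bob@example.com", new FakeUserStore(), stubClock, mock, dummyLogger);
mock.verify();
```

Note how the risk grows down the list: the dummy and the stub know nothing about the SUT, while the mock encodes an expectation about how the SUT talks to its collaborator.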

Chicago → Fakes + Interfaces → State

Real functions for fakes and stubs, omitting sticky implementations

Core domain logic (the thing defining your business workflows)

Infrastructure concerns are considered details, simulated by a small number of mocks or pure state inputs, reminiscent of functional programming.

Prefers assertions on return values or changes of output state (i.e. outgoing parameters, when that state is mutable).

London → Mocks → Expected calls

Tests interactions between objects, hinted by message passing

Mocks are used generously to isolate collaborators, often for interfaces that do not have implementations (see: XP walking skeleton)

Uses message passing rules: "It is correct for X to have been called with Y, and incorrect for it to be omitted."

Rather than inputs of state, collaborator functions and classes are passed for async or complex behavior that has to be faked.

But: Tests are more brittle. Each mocked collaborator couples the test to the interface of the mock, as well as the shape of the behavior under test (usually a class or function).

Test for Confidence, not isolated test coverage

Engineers get stuck when they consider tests as defensive programming or gatekeeping engineering hygiene instead of thinking of testing as a steering mechanism. The test doubles are there to help control which behavior path you want a proof of correctness for, not a full substitute that simulates the system.

The notion that unit testing and TDD require a strict level of isolation is neither true, nor helpful for building good software, nor helpful for writing GUTs. You can observe engineers’ pursuit of this isolation from “the ugly bits of the real world” in order to get a memory-friendly test harness. But the real world is our focus!

Isolation is helpful only to separate the system-under-test’s (SUT) behaviors and collaborators from behaviors not currently being tested.

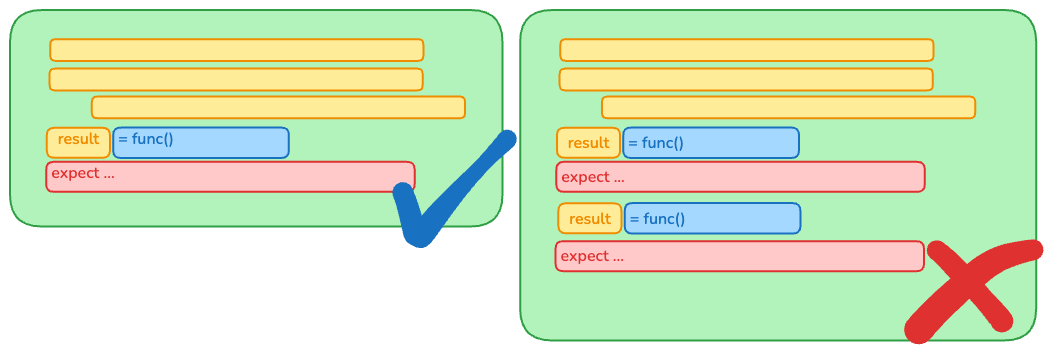

4. Keep your Tests Simple

Code smells, design issues and a certain level of attention to detail are easy to spot merely by observing the shape of code. Elegance has a place in software as long as most of our problems come from the experience of reading code, modifying it, or the complex dance of discussing these things. Code smells also occur in test code, and they are just as easy to spot.

A good unit test would look like this, regardless of whether you use xUnit, Mocha, Jest, or GoogleTest:
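As a minimal sketch of that shape, assuming a hypothetical `applyDiscount` function and a hand-rolled `test` helper standing in for the runner of your choice:

```typescript
// Hypothetical production code: a pure function with no hidden collaborators.
function applyDiscount(totalCents: number, percent: number): number {
  return Math.round(totalCents * (1 - percent / 100));
}

// Tiny stand-in for a test runner so the sketch runs without dependencies.
const results: string[] = [];
function test(name: string, body: () => void): void {
  try {
    body();
    results.push(`ok: ${name}`);
  } catch {
    results.push(`FAILED: ${name}`);
  }
}
function assertEqual(actual: number, expected: number): void {
  if (actual !== expected) throw new Error(`expected ${expected}, got ${actual}`);
}

// The test itself: one behavior, three short steps, one assertion.
test("a 10% discount turns 200.00 into 180.00", () => {
  // Arrange
  const totalCents = 20000;

  // Act
  const discounted = applyDiscount(totalCents, 10);

  // Assert
  assertEqual(discounted, 18000);
});
```

The part worth copying is the body of the test: it names one behavior, sets up only what that behavior needs, and fits on a screen with room to spare.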

If you can’t fit a single test on a screen, it’s a strong signal that there’s an underlying design issue in the production code. Likely the only solutions will be either refactoring it without tests to rein it in or choosing a riskier testing strategy (e2e, snapshot testing). We won’t go into detail about why those two are riskier in this article, but I’m happy to have a conversation about it. Shoot me a DM.

Testability starts with how you structure the code, not with the tests themselves. This is the heart of design.

“If your mocking is complex it’s telling you that your design sucks.“ —Dave Farley, Modern Software Engineering

Most of my conversations with never-testers explore their appetite and time sensitivity with regard to extracting collaborators and refactoring without tests, rather than test-writing or TDD directly with an over-use of mocks.

5. Plan = set limits + avoid exploration

I recommend breaking down your value stream in the context of testing with these knobs:

Just-in-time Design: Keep design and reversible architecture to a minimum until we have proven that the software is worth building. This enables system behaviors to be cheap to refactor while we’re testing for correctness and value.

(Acceptance-)TDD: Create a scaffold that prevents us from building implementations, design and architecture for anything other than the desired behavior. TDD further helps keep the scoping burden on the developer, rather than on whoever is administering failed attempts, usually QA/non-engineering product.

Demand-first, incremental delivery: Enable quick feedback on costs (are changes expensive?) and efficacy (does the value stream still value it?) while rolling out the changes at the desired level of quality.

With the above rules in mind, mocks maximise locality in the testing and software development process, at the cost of delays to the overall value stream and more expensive future changes. So for mocks to be worth it, we want to:

Minimise how many we have, ideally avoiding them altogether

Maximise how much value each provides

This exercise is worth running for your own situation as I’m sure it will differ in details that are important to you. How is your breakdown different?

Example: Chicago, with stubs
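A minimal sketch of the Chicago style, assuming a hypothetical order-pricing domain (`orderTotal`, `PriceList` and `OrderLine` are illustrative names, not taken from any real code base). The stub is an inline object literal, and the check is made on the returned value:

```typescript
// Hypothetical domain types; all names here are assumptions for illustration.
interface PriceList { priceOf(sku: string): number }
interface OrderLine { sku: string; quantity: number }

// Core domain logic: real code, exercised for real in the test.
function orderTotal(lines: OrderLine[], prices: PriceList): number {
  return lines.reduce((sum, line) => sum + prices.priceOf(line.sku) * line.quantity, 0);
}

// Stub: an inline object literal satisfies the interface structurally.
const stubPrices: PriceList = {
  priceOf: (sku) => (sku === "book" ? 1500 : 300),
};

// State-based check: assert on the returned value, not on who called whom.
const total = orderTotal(
  [
    { sku: "book", quantity: 2 },
    { sku: "pen", quantity: 3 },
  ],
  stubPrices,
);
if (total !== 3900) throw new Error(`expected 3900, got ${total}`);
```

The test knows nothing about how `orderTotal` walks the lines or how often it asks for a price, so the implementation stays free to change.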

Example: London, with mocks
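A minimal sketch of the London style, assuming a hypothetical `placeOrder` function and `PaymentGateway` interface. The mock is written by hand here to keep the sketch dependency-free; a mocking framework would normally generate the equivalent for you:

```typescript
// Hypothetical outgoing collaborator; the name and signature are assumptions.
interface PaymentGateway { charge(customerId: string, amountCents: number): void }

// Behavior under test: placing an order must tell the gateway to charge.
function placeOrder(customerId: string, amountCents: number, gateway: PaymentGateway): void {
  if (amountCents > 0) gateway.charge(customerId, amountCents);
}

// Hand-rolled mock: records calls and verifies the expected interaction.
class MockPaymentGateway implements PaymentGateway {
  private readonly calls: Array<{ customerId: string; amountCents: number }> = [];

  charge(customerId: string, amountCents: number): void {
    this.calls.push({ customerId, amountCents });
  }

  // The expectation replaces the assertion:
  // "It is correct for charge to have been called once with these arguments."
  verifyChargedOnce(customerId: string, amountCents: number): void {
    const call = this.calls[0];
    const ok =
      this.calls.length === 1 &&
      call?.customerId === customerId &&
      call?.amountCents === amountCents;
    if (!ok) throw new Error(`expected one charge of ${amountCents} for ${customerId}`);
  }
}

// Interaction-based check: no return value is inspected, only the message sent.
const gateway = new MockPaymentGateway();
placeOrder("customer-42", 3900, gateway);
gateway.verifyChargedOnce("customer-42", 3900);
```

The test is now coupled to the shape of `charge`: rename the method or split the call in two and the test fails, even if customers are still charged correctly.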

… that doesn’t look like our code at all!

I’m sure you’ll notice that the Chicago style stands out as being more natural, at least in TypeScript, where the interface implementation can be implicit and inline. Had I shown you an example in Java or C#, the difference in the amount of code would have been more drastic (the mock is shorter), and that’s naturally where most beginners get misled.

6. Simple Rules to Follow

Only 1 Assertion Per Test

Tests are most useful when they guide you towards the issue when there’s something wrong

The prize isn’t in “TDDing the production code”, the prize is self-testing code that can have a “test run” for critical behavior

If a test has multiple assertions and cannot be written with only one, that is a design improvement opportunity

Mocks Replace the Assertion → Only 1 Per Test

Mocks replace outside-facing communication (message passing)

You can mix mocks with stubs and fakes, but the mock is replacing an assertion, not another double

Note that tests with mocks don’t have a separate assertion: verifying the mock’s expectation is the assertion

Don’t Mock What You Don’t Understand

Stop mocking libraries. Mock your own abstractions.

Bad:

jest.mock('mongoose');

Good:

const productRepository = InMemoryRepository.for(...).with(...);

If your test knows you're using GraphQL, Prisma, or RabbitMQ, your system is bleeding boundaries. Tests should express what you want, not how you fetch it.
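To show what mocking your own abstraction can look like, here is a minimal sketch. It does not reproduce the builder-style `InMemoryRepository` call above; the `ProductRepository` interface, the `InMemoryProductRepository` class and the `priceLabel` function are all hypothetical names chosen for illustration:

```typescript
// Hypothetical port owned by the application, expressed in domain terms.
interface Product { id: string; name: string; priceCents: number }
interface ProductRepository { findById(id: string): Promise<Product | undefined> }

// In-memory implementation of our own abstraction; no database library involved.
class InMemoryProductRepository implements ProductRepository {
  private readonly products = new Map<string, Product>();

  constructor(products: Product[]) {
    for (const product of products) this.products.set(product.id, product);
  }

  async findById(id: string): Promise<Product | undefined> {
    return this.products.get(id);
  }
}

// Code under test only knows the port, not the storage technology behind it.
async function priceLabel(id: string, repository: ProductRepository): Promise<string> {
  const product = await repository.findById(id);
  if (!product) return "unknown product";
  return `${product.name}: ${(product.priceCents / 100).toFixed(2)}`;
}

// The test expresses what it wants (a product with a price), not how it is fetched.
const productRepository = new InMemoryProductRepository([
  { id: "p1", name: "Notebook", priceCents: 1250 },
]);
const label = priceLabel("p1", productRepository);
```

Swapping the storage technology later means writing a new implementation of `ProductRepository`; a test like this one would not need to change.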

🔐 Bonus: Not relevant to your Production Code? Play around with my ChatGPT Prompt

If you like to learn new concepts code-first, there are code examples at the end of the article along with the prompts I used to generate them. Use the prompt if you want examples in your preferred language and domain!
