First of all, welcome to the wave of new subscribers this week! Happy to have you around. If you’re new, these are the main upcoming highlights:

I'm starting a free book club reading Implementing Lean Software Development next week. DM me LEAN to join.

I host live streams every Thursday at 16:30 CEST. The current theme is DDD; this week is about Aggregates and modern patterns. Come update your DDD knowledge.

Today’s Crafting Tech Teams article is about interpersonal risks and motivation that an overwhelmed team faces. Often this is the case with technical debt and lacking practices. That will be our topic of exploration today.

Lead as a don’t knower

The main theme underpinning the overwhelm is cognitive load. It’s hard enough coming up with pragmatic, novel ways of engineering a product that’s fresh. It’s much harder to do so on a product that is built on top of a legacy system.

Each member of your team will generate somewhere between 50,000 and 70,000 ideas in a single day. That’s about 20,000 at work. They are setting themselves up for a bleak future, and they need your help, if that idea budget constantly goes to:

The CI/CD pipeline being slow

Having no idea how the systems work

Triaging 200 bugs

Thinking about how all the unknowns will affect the sprint and roadmap

Spending their idea budget on reasons, excuses, analysis and tough conversations

Most engineers in such a situation will get overwhelmed. It’s natural. It’s human. They think they need to know all the answers. But here’s the deal: no one knows the best way. Still, it can be difficult to default to that style of communication on a tightly run ship. Rather than asking them to speak up more, be a don’t knower leader. Regardless of whether you are the manager, architect, senior or just the newest person on the team: model the behavior of saying “I don’t know.”

This will reopen the doors to conversations such as, “I don’t know…”

… but this is what I’m going to do.

… but I can find out if you help me reassign some of my work.

… but is this important now? By when do we need to make a decision?

Cut the flow: Address new additions before tackling the old

I wrote about this recently on LinkedIn, to a positive response and sympathy for those who walk the talk.

There’s input and then there’s output. The best way to close this loop is to have tests cover a tight, positive feedback loop.

A masterful piece on these feedback loops was co-written this week in response to a controversial productivity study by McKinsey. I’m sure you’ve seen it.

“But Denis, our legacy codebase is not testable!”

This is normal, but I feel your pain. It’s not the ideal starting point, but it’s the hand you were dealt. Now let’s play poker.

Our objective is singular: Build the team’s confidence. We achieve that by minimising the fear and anxiety involved with touching the legacy code.

It is the team's capability of agreeing on design that determines ease of testing and refactoring. And it starts with new additions to the codebase. Ensure that positive incentives are in place to make the new features cheaper to test and refactor than the existing ones.

This also means you will want to refactor certain—small—areas of the legacy codebase that you are touching. More on that later.

Don't overwhelm your team with katas and workshops. Focus on what’s in front of you:

Pick a piece of code along your critical path with an ensemble or pair

Set the stage and intent: We are improving the design / We are making this cheaper to change / We are making the next feature easier and faster to deliver

Identify a major code smell or WTF/m (WTFs per minute) point

Have the pair look up the refactoring path for that smell.

Now this is important: Fix just that. No scrolling around, no fixing indentation or typos. Just the smell you are working on!

Keep the PR small: <50 LOC. Review, merge, reflect on what you learned.

Regarding testing, you have three options:

Easy: Rely on prior unit and acceptance tests

Your tests should not fail due to the refactoring.

If they do, it's time to remove or update them.

Medium: Rely on acceptance tests only

Make only the small-step PR, then run the entire battery. Yes, it's weird to run the entire QA suite for 50 lines of code. It's supposed to be annoying. That annoyance incentivises you to write unit tests.

Once you know whether the code is covered or not and whether it works, test-cover it with unit tests. It won't be easy. You'll make mistakes. You'll mistakenly test implementation instead of behavior.

At this stage do not use automated tests to determine production-readiness. Use them as a safety net to continue refactoring the mess.
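To make the "implementation instead of behavior" mistake concrete, here is a minimal sketch. Everything in it is hypothetical and invented for illustration: the `Cart` class, its `_items` list and both test functions. The first test peeks at private storage and would break on a harmless refactoring; the second only checks what a caller can observe.

```python
class Cart:
    """Hypothetical shopping cart, used only for illustration."""

    def __init__(self):
        # Internal detail: this list could become a dict tomorrow.
        self._items = []

    def add(self, name, price, quantity=1):
        self._items.append((name, price, quantity))

    def total(self):
        return sum(price * quantity for _, price, quantity in self._items)


def test_implementation():
    # Brittle: asserts on the private list, so it fails as soon as the
    # storage is refactored, even though the cart still works.
    cart = Cart()
    cart.add("book", 10, quantity=2)
    assert cart._items == [("book", 10, 2)]


def test_behavior():
    # Robust: asserts only on the result a caller can observe.
    cart = Cart()
    cart.add("book", 10, quantity=2)
    cart.add("pen", 3)
    assert cart.total() == 23
```

If you later replace `_items` with a dictionary, `test_behavior` keeps passing and `test_implementation` has to be rewritten. That is the kind of test that should not fail due to a refactoring.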

Your company: You don't have any tests.

This is the trickiest scenario as you can get overwhelmed easily. If the codebase is a coupled mess, you likely won't be able to test without setting up a giant amount of DB, IO and 3rd-party service stubs, doubles and mocks.

Approach this situation as you would fixing a water leak:

Turn the water OFF: refactor in a direction that separates Input/Output from compute. Keep the data local and close to the compute. The compute is your methods. The data is what's in memory. How you fetch from your repositories: that’s the I/O.
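A minimal sketch of that separation, assuming a made-up ordering example. The names `OrderLine`, `order_total`, `charge_order`, `load_lines` and `charge` are all hypothetical, as is the fixed 10% discount. The point is only the shape: a pure function holds the compute, and a thin shell around it does the fetching and writing.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class OrderLine:
    price_cents: int
    quantity: int


def order_total(lines: Iterable[OrderLine], discount_percent: int = 0) -> int:
    """Compute: a pure function. No DB, no network, no mocks needed."""
    subtotal = sum(line.price_cents * line.quantity for line in lines)
    return subtotal * (100 - discount_percent) // 100


def charge_order(
    order_id: str,
    load_lines: Callable[[str], List[OrderLine]],
    charge: Callable[[str, int], None],
) -> int:
    """I/O shell: fetch the data, delegate to the compute, write the result."""
    lines = load_lines(order_id)                      # I/O in (hypothetical repository)
    amount = order_total(lines, discount_percent=10)  # compute on local data
    charge(order_id, amount)                          # I/O out (hypothetical payment call)
    return amount
```

With this shape, `order_total` can be unit-tested with plain values, and the shell only needs a couple of tests with simple fakes passed in as `load_lines` and `charge`.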

If this is along your critical path, congratulations: you have to make a difficult decision.

Will you go hexagonal and isolate?

Are you adopting pure OO with the help of DDD?

Strangler fig?

Anti-corruption layer (DDD)?

Every company in our industry has to deal with such tradeoffs every day. I coach them regularly. Rest assured all these problems are mapped. Each code and architecture smell has a refactoring that solves it. You just have to practice them. Let’s look at a few examples.

Anti-corruption layers

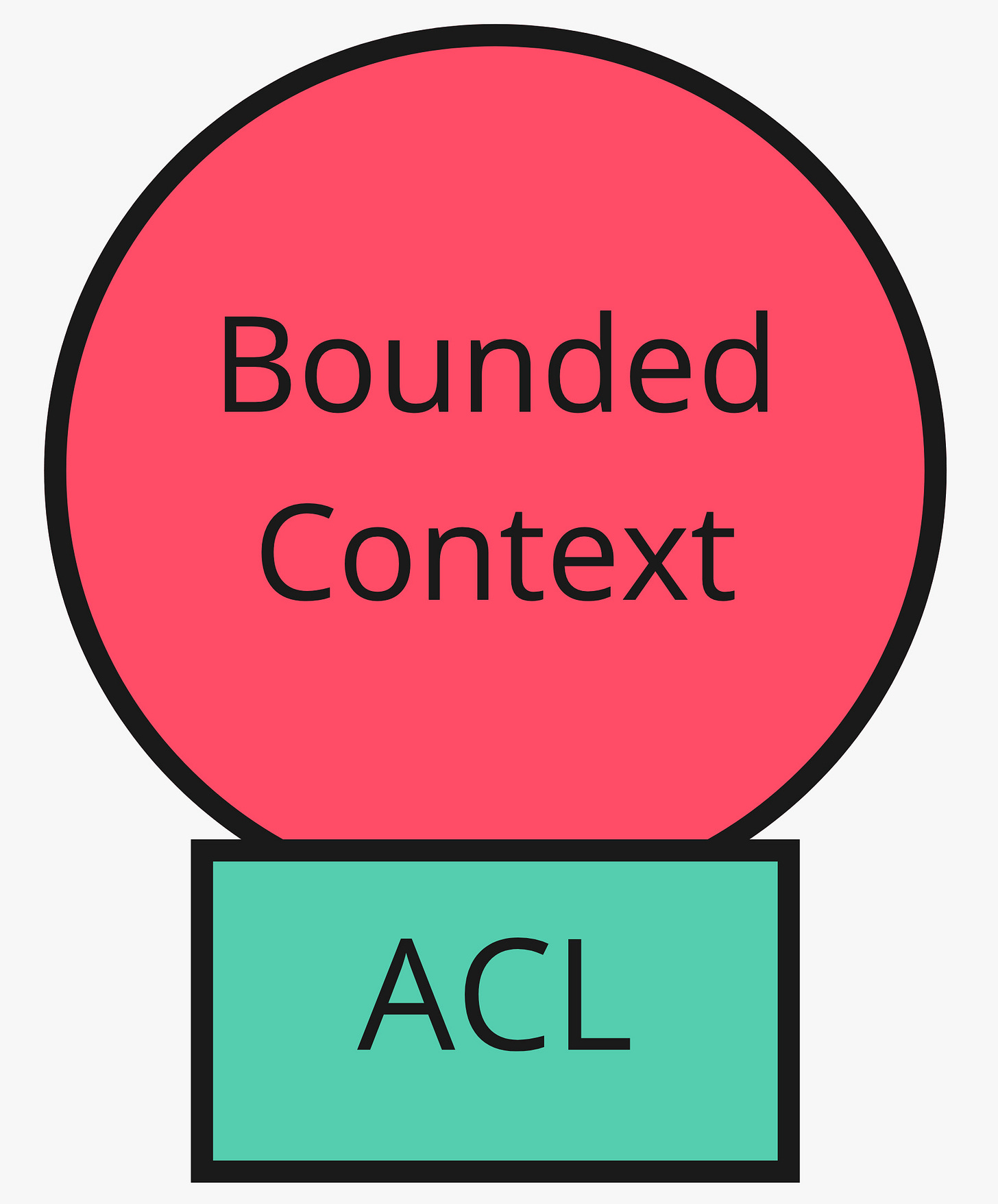

In Domain-Driven Design we recognise the need to protect a new design—a new initiative—from the corruption that may be introduced by bad habits from a legacy service.

"As a downstream client, create an isolating layer to provide your system with functionality of the upstream system in terms of your own domain model. This layer talks to the other system through its existing interface, requiring little or no modification to the other system. Internally, the layer translates in one or both directions as necessary between the two models." (Source: DDD Reference by Eric Evans)
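As a rough sketch of what such an isolating layer can look like in code, assume a legacy CRM that returns records with cryptic field names. All names here are hypothetical and not from any real system: `LegacyCrmClient`, its `fetch` method, the `CUST_NO`/`FNAME`/`LNAME`/`STAT` fields, `Customer` and `CustomerGateway`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    """Our own domain model (hypothetical)."""
    customer_id: str
    full_name: str
    is_active: bool


class LegacyCrmClient:
    """Stand-in for the upstream system; its interface stays untouched."""

    def fetch(self, cust_no):
        # A real client would call the legacy service here.
        return {"CUST_NO": cust_no, "FNAME": "Ada", "LNAME": "Lovelace", "STAT": "A"}


class CustomerGateway:
    """Anti-corruption layer: the only place that knows the legacy vocabulary."""

    def __init__(self, legacy):
        self._legacy = legacy

    def find(self, customer_id: str) -> Customer:
        raw = self._legacy.fetch(int(customer_id))
        # Translate from the legacy model into our own domain model.
        return Customer(
            customer_id=str(raw["CUST_NO"]),
            full_name=f'{raw["FNAME"]} {raw["LNAME"]}',
            is_active=raw["STAT"] == "A",
        )
```

The rest of the new code depends only on `Customer` and `CustomerGateway`, so legacy naming and status codes never leak into the new design.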

Strangler fig pattern

From Martin Fowler’s legacy rehab, the strangler fig pattern is a specialised form of anti-corruption layers. This option is best when you have a combination of new context and old, legacy systems that you want to move on from rather than upgrade.

This works very well in co-hosted systems like PHP, Ruby and Python where you want to leverage upgrading the stack as an up-front decision without having to rewrite the old codebase first.
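One common way to apply the pattern is a routing facade in front of both systems. The sketch below is an assumption about how that could look, not a prescribed implementation: `legacy_app`, `new_app`, `StranglerFacade` and the URL prefixes are all made up for illustration.

```python
def legacy_app(path):
    """Stand-in for the old system (hypothetical)."""
    return f"legacy:{path}"


def new_app(path):
    """Stand-in for the new system (hypothetical)."""
    return f"new:{path}"


class StranglerFacade:
    """Routes each request to the new system once its area has been migrated."""

    def __init__(self, legacy, modern, migrated_prefixes=()):
        self._legacy = legacy
        self._modern = modern
        self._migrated = list(migrated_prefixes)

    def migrate(self, prefix):
        # Flip one slice of functionality over to the new system.
        self._migrated.append(prefix)

    def handle(self, path):
        if any(path.startswith(prefix) for prefix in self._migrated):
            return self._modern(path)
        return self._legacy(path)


facade = StranglerFacade(legacy_app, new_app, migrated_prefixes=["/invoices"])
print(facade.handle("/invoices/7"))  # served by the new system
print(facade.handle("/orders/1"))    # still served by the legacy system
```

Each call to `migrate` moves one more slice away from the old codebase, so the legacy system shrinks over time without a big-bang rewrite.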

More in the article below 👇

Optimise for a Strong Legacy, not Friction

Make your Legacy Code be a Monument, not a Museum

There are relics from ancient times strewn about Europe that have been turned into Museums. Picturesque relics of an unknown complexity where the details vanished and the only thing that survived the test of time were the…

Work on fundamental skills

Fast feedback

Small changes

Stable at any interruption

A productive team is a low standard. An ideal team is safe to make mistakes and leverage their productivity for known, transparent product engineering goals.

These are the hallmarks of modern software engineering. Whether you are using continuous delivery, integration, merging or git flow, it is in your team’s best interest to keep changes small so that you can check for upstream feedback more frequently.

When teams invite me to help them improve their delivery speed, we usually follow a common route along their fundamentals:

1. Self-diagnosis. "We want to learn X" (X may be TDD, DDD, EM, etc.)

2. No time to apply the teachings. I shadow and survey them.

3. Huge opportunity to improve support / product triage. This is what kept them “busy”, but not productive.

4. They want more tactical tips on negotiation and estimation. The cat is out of the bag; they are starting to build confidence.

5. Overpromise, technical debt kicks in. This is the crucial intersection. Some teams go back to 1. But some push through and start working on smaller pieces.

6. PRs become smaller. Refactoring kicks in.

7. They realise they need help identifying code smells and with OO design. We have come back to the original self-diagnosis, but it took a long time to address the root cause issues and their symptoms.

💬 Join the discussion: Live with John Gallagher

This week: Tactical DDD Patterns

We’re going to get our hands dirty on this week’s stream: looking into common traps that disrupt adoption of DDD patterns, and exploring the nuance of aggregates and the influence they may have on domain events and testing.

Call for feedback: How are you liking the chat participation on LinkedIn? Is the Event for each week easy to find? Do you get value from the

Mark your calendar tomorrow (Thu 31st, 2023) at 16:30 CEST.

“You'll mistakenly test implementation instead of behavior.” I have to be a don’t knower here - could you explain what this implementation vs behavior distinction is? I have heard you mention both of these terms often in your streams, and I just get confused every time I hear the word behavior.