Master The Business Benefits Behind Dependency Injection

You thought it was about pretty configs and constructors?

In today’s Crafting Tech Teams issue, we look into the business benefits of dependency injection. This isn’t a basic tutorial. The topics below are aimed at tech leads and managers who want to understand the business impact of this practice and principle.

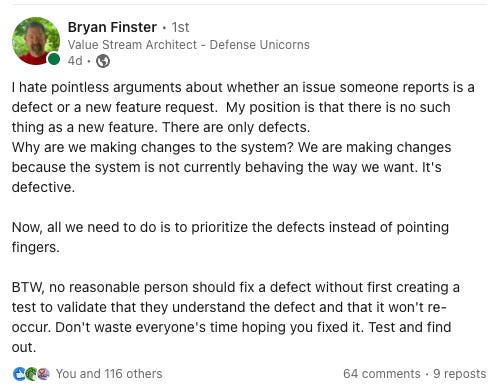

1. The business cycle: Imagine. Test. Change. Chaotic teams focus on cash flow, not profit. This quickly drains their money and their room to manoeuvre.
2. Injection enables composition. Composition enables testing. Testing enables change. The recipe for a happy team: cleanup and tidying. 10 seconds now saves you 10 minutes later. 10 minutes now saves someone else 10 hours later.
3. Test Implementations are part of Production Code. Tests determine productivity and efficacy; efficiency comes from writing tests well. “Going fast” by skipping them leads to the cash flow vs. asset profit problem (see point 1).
4. Successful businesses change. A lot. You think you can afford to do it later? Later there will be many more things to do and less time to do them in. Refactoring now frees up some of that time and makes it available for future agility.
5. Unsuccessful businesses struggle with maintenance and change. A lot. There is nothing worse than a team that is afraid to change its own code, especially when the business can no longer track which features are being used.

💬 Join the discussion - Injection & Clean Architecture Stream on Thursday. Got questions? Want to see me react to Dependency Injection videos? Are you on a team using Clean Architecture and are wondering how to best inject frameworks and ORM concerns?

The business cycle: Imagine. Test. Change.

No, I’m not talking about TDD, though I do recommend the practice if you’re interested. The concept of change covers two types of changes: changes in business intent and refactoring.

Business intent is the requested change imagined by stakeholders. You may notice I make no distinction between bugs and new features. They are the same.

In essence, the first high-level test written for any request is the story, spec or work task: “We want this thing and it’s not supported by the application yet.” This is a documented decision. You can tell the feature is missing, even though the check is written in prose rather than as a coded test.

Author’s note: By the way, formalisation of features in this manner is the domain of Behavior-Driven Development.

When the team agrees it is missing, you can start work. The process of this work is change: refactoring and adding new functionality. Most refactorings extract composable pieces from a big pile and compartmentalise them.

It is sort of like decluttering a messy kitchen and putting everything in the right drawer.

The creation-inverse of extraction is inlining: I will keep a mixed drawer, rather than several sorted ones.

The usage-inverse of extraction is injection: I need to get 4 items from 4 drawers and put them all on the counter.
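To make the drawer metaphor concrete, here is a minimal Python sketch. All names (`checkout_inlined`, `PriceCalculator`, `ReceiptFormatter`, `Checkout`) and the flat 20% tax rate are hypothetical illustrations. The inlined version is the mixed drawer; the extracted classes are the sorted drawers; and `Checkout` receiving them through its constructor is injection, taking items from several drawers and putting them on the counter.

```python
from dataclasses import dataclass


# Inlined: one "mixed drawer". Pricing and formatting are tangled
# in a single function, so neither can be reused or replaced alone.
def checkout_inlined(prices: list[float]) -> str:
    total = sum(prices) * 1.2  # hypothetical flat 20% tax
    return f"Total: {total:.2f}"


# Extracted: each concern gets its own "drawer".
class PriceCalculator:
    def __init__(self, tax_rate: float) -> None:
        self.tax_rate = tax_rate

    def total(self, prices: list[float]) -> float:
        return sum(prices) * (1 + self.tax_rate)


class ReceiptFormatter:
    def format(self, total: float) -> str:
        return f"Total: {total:.2f}"


# Injected: Checkout does not build its collaborators, it receives them.
@dataclass
class Checkout:
    calculator: PriceCalculator
    formatter: ReceiptFormatter

    def run(self, prices: list[float]) -> str:
        return self.formatter.format(self.calculator.total(prices))


checkout = Checkout(PriceCalculator(tax_rate=0.2), ReceiptFormatter())
print(checkout.run([10.0, 5.0]))  # prints "Total: 18.00"
```

Both versions produce the same receipt; the difference is that the extracted pieces can now be swapped, tested or removed one at a time.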

Dependency injection is not to be confused with the SOLID principle of dependency inversion.

In short:

Inversion is the principle of ordering dependency hierarchies and direction

Injection is the transport mechanism of passing instance references around

There’s a prior article on that entire topic below.

Make Dependency Injection your Superpower
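A minimal Python sketch of the inversion/injection distinction, using hypothetical names (`Notifier`, `OrderService`, `ConsoleNotifier`). Inversion shows up in the types: the high-level `OrderService` depends on an abstraction it defines (a `typing.Protocol`), not on a concrete delivery mechanism. Injection shows up at the call site: outer code creates a concrete instance and passes the reference in.

```python
from typing import Protocol


# Inversion: the high-level policy defines the contract it needs.
# Low-level details depend on this contract, not the other way round.
class Notifier(Protocol):
    def send(self, message: str) -> None: ...


class OrderService:
    # Injection: the instance reference arrives through the constructor.
    def __init__(self, notifier: Notifier) -> None:
        self._notifier = notifier

    def place_order(self, item: str) -> None:
        self._notifier.send(f"Order placed: {item}")


# A low-level detail that satisfies Notifier structurally.
class ConsoleNotifier:
    def send(self, message: str) -> None:
        print(message)


# The outer code decides which concrete instance is passed in.
OrderService(ConsoleNotifier()).place_order("burger")  # prints "Order placed: burger"
```

The two ideas are independent: you could inject a concrete `ConsoleNotifier` type directly (injection without inversion), or depend on `Notifier` while constructing the instance internally (inversion without injection).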

Injection enables composition. Composition enables testing. Testing enables change.

The driving factors for change management are composability and injection. They enable teams to compose complex applications by providing a mechanism for loosely coupling components and classes.

Engineering teams spend most of their time in modes of reading and understanding. Coding and deploying are relatively minor distractions. There are two mutually accelerating components to an engineer’s level of understanding:

Understanding the Business Content

Understanding how to change the current software

When the second point is trivial, we call the project a greenfield project: usually there is no prior code other than boilerplate. In real systems, however, the amount of existing legacy code outweighs any single increment of change.

Managing the different injection configurations is the backbone of complex applications, especially at enterprise scale.
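One common way to manage those configurations is a composition root: a single place that picks the concrete implementations and wires them together. The sketch below is an illustration under assumptions, not a prescribed structure. `build_app`, `SignupService`, both repositories, the environment names and the `users.db` path are all hypothetical; the production repository uses the standard-library `sqlite3` module as a stand-in for real persistence.

```python
import sqlite3
from typing import Protocol


class UserRepository(Protocol):
    def add(self, name: str) -> None: ...
    def count(self) -> int: ...


class SqliteUserRepository:
    """Persists users with the standard-library sqlite3 module (real I/O)."""

    def __init__(self, path: str) -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute("CREATE TABLE IF NOT EXISTS users (name TEXT)")

    def add(self, name: str) -> None:
        self._conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
        self._conn.commit()

    def count(self) -> int:
        return self._conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


class InMemoryUserRepository:
    """Same contract, backed by a plain list (no I/O)."""

    def __init__(self) -> None:
        self._names: list[str] = []

    def add(self, name: str) -> None:
        self._names.append(name)

    def count(self) -> int:
        return len(self._names)


class SignupService:
    def __init__(self, users: UserRepository) -> None:
        self._users = users

    def sign_up(self, name: str) -> int:
        """Registers a user and returns the new user count."""
        self._users.add(name)
        return self._users.count()


def build_app(environment: str) -> SignupService:
    """Composition root: the only function that knows the concrete classes."""
    if environment == "production":
        return SignupService(SqliteUserRepository("users.db"))  # hypothetical path
    if environment == "test":
        return SignupService(InMemoryUserRepository())
    raise ValueError(f"unknown environment: {environment}")
```

`SignupService` never changes between configurations; adding a new environment or replacing the database touches only `build_app`, which keeps the scope of each change small.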

Business Context

Why should a founder or non-technical stakeholder care about this? Because injection by composition accelerates the rate at which change happens by reducing the scope of the change.

This is the equivalent of commoditising small pieces of software that aren’t individually user-facing. Like a prep kitchen preparing buns and onions for a burger restaurant. Imagine if each customer had to wait for their bun to be leavened, shaped, baked and cooled before they got their burger. They’d wait days rather than minutes!

And that waiting is what happens in software businesses that don’t do enough prep-work, that is, refactoring. In such teams, software is not modular: it is strongly coupled and difficult to inject into and compose. That is the primary cruft that besets teams who manage legacy software with sub-standard processes. You may check out a few use cases here:

Technical Debt - Martin Fowler

Measuring developer productivity:

Test Implementations are part of Production Code.

I notice a particular type of head-scratching that happens when coaching teams, especially during TDD workshops. This happens frequently in hexagonal architecture meetings as well:

“Why should I ever want to replace my actual implementation with in-memory fakes?”

The answer is simple: Testing against a tightly-controlled fake optimises the run-time of tests for components that require I/O. This runtime is crucial for fast feedback loops. Remember: we want the burgers out in minutes, not days!
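A minimal sketch of what a tightly controlled fake looks like, assuming a hypothetical payment component. `PaymentGateway`, `SlowNetworkGateway`, `FakePaymentGateway` and `OrderCheckout` are illustrative names, and the `time.sleep` only simulates a network round trip where a real client would call a provider's API. The fake has no I/O, records what it was asked to do, and can be scripted to decline, so the tests run instantly and deterministically.

```python
import time
from typing import Protocol


class PaymentGateway(Protocol):
    def charge(self, amount_cents: int) -> bool: ...


class SlowNetworkGateway:
    """Stand-in for a real payment client; the sleep simulates network latency."""

    def charge(self, amount_cents: int) -> bool:
        time.sleep(0.5)  # hypothetical round trip to an external provider
        return True


class FakePaymentGateway:
    """In-memory fake: no I/O, scripted outcome, records every charge."""

    def __init__(self, approve: bool = True) -> None:
        self.approve = approve
        self.charges: list[int] = []

    def charge(self, amount_cents: int) -> bool:
        self.charges.append(amount_cents)
        return self.approve


class OrderCheckout:
    def __init__(self, gateway: PaymentGateway) -> None:
        self._gateway = gateway

    def pay(self, amount_cents: int) -> str:
        if amount_cents <= 0:
            return "rejected"
        return "paid" if self._gateway.charge(amount_cents) else "declined"


# Tests against the fake can simulate a declined card
# without touching a real provider or waiting on the network.
def test_declined_card() -> None:
    fake = FakePaymentGateway(approve=False)
    assert OrderCheckout(fake).pay(1299) == "declined"
    assert fake.charges == [1299]


def test_invalid_amount_never_charges() -> None:
    fake = FakePaymentGateway()
    assert OrderCheckout(fake).pay(0) == "rejected"
    assert fake.charges == []


start = time.perf_counter()
test_declined_card()
test_invalid_amount_never_charges()
print(f"fake-based tests took {time.perf_counter() - start:.4f}s")
```

Injecting `SlowNetworkGateway` instead would make every call to `pay` wait half a second. Multiplied across a test suite, that is the difference between burgers in minutes and burgers in days.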

I often witness a peculiar discomfort gripping engineers when I suggest changing their implementation to make tests run faster. It stems from a strict separation of code into production and testing components. You have likely seen it in folder structures: tests live far away from what they are testing.

However, fast tests are not a nice-to-have luxury. It is exactly the reliability and performance of the test suite that determines the rate of change in a software product. Do your tests require hours to run and days of manual QA? Guess how long a simple change takes. A team lacking fast feedback will gradually dig itself into an endless hole. Treating feedback as a scarce resource creates a negative feedback loop:

Our releases are slow

Because the tests take too long

So we cram as many changes as possible into each testing cycle

Because testing is expensive

This cycle produces lots of negative feedback of things not working

It’s too late, the code is already coupled and glued, hard to change

We’d need to refactor to make it easier to change

Delay release, do it now: Next release will be faster

Rush release, do it later: Next release will be slower

Successful businesses change. A lot.

You iterate on an MVP. You build a team. The product is successful. Users come in so fast you can barely cope with scaling systems to keep everything afloat. Guess what the next order of business is?

Change.

You can’t escape it. There is no stability in our industry. In construction and civil engineering, you can erect a building or bridge and maintain it once every 5 years.

Software is in constant flux.

1 month passes. You had an old feature branch lying around and want to merge it. But no one understands what it does, and it conflicts with your mainline source code.

3 months pass. A maintainer of an important subsystem leaves the team. A simple change that previously took minutes now takes a new developer weeks.

6 months pass. Even if you made no feature changes, your deployment artefacts use libraries that are now out-of-date.

12 months pass. No feature changes? Tough luck, you can no longer re-deploy because one of your frameworks is no longer available on a public repository.

24 months pass. You are certified for SOC 2 and ISO/IEC 27001. You can no longer maintain the certificates because the components and frameworks in the application you haven’t touched in 2 years are past end-of-life.

To keep up with all this, composability and refactoring must be part of the every-hour cycle of work. Not clean up sprints. Not refactoring hackathons. Not every week. Not every day. Every hour.

Avid readers of Crafting Tech Teams will notice a pattern. You can look up daily processes and refactoring advice on prior issues.

Unsuccessful businesses struggle with maintenance and change. A lot.

Businesses that rely on VC money to accelerate an initial push often stagnate when their cash reserves start matching their cash flow. Especially during layoffs and company restructurings, a team’s ability to maintain legacy software can diminish. Remember the chapter of this post?

Brain drain in business understanding makes subsequent software increments exponentially slower, regressing from minutes back to weeks. That is why tools like SonarQube and CodeScene measure it actively.

Struggling teams face the opposite of the scale, maintenance and security problems that successful businesses have: they need to remove features safely. Cutting unused product capability and saving on operational expenses is the main priority for a failing or pivoting startup.

Unfortunately, the fear of change and the lack of certainty when removing entire verticals in your code base can cause a lot of friction.

This entire process can be summarised as simplification. And guess what refactoring and injection provide as a positive side effect?

💬 Join the discussion - Injection & Clean Architecture Stream on Thursday

Mark your calendar! I stream every Thursday for an hour at 16:30 CEST. August 10th will be about Dependency Injection with Clean Architecture. During the last stream, 40 people joined live, keeping the chat busy and discussing interesting aspects of clean architecture.

You don’t have to be on time, feel free to join late. Post your questions on LinkedIn or live during the stream.