Roadmaps don't create a product; Releases do

Phantom Streams of Unfinished Work

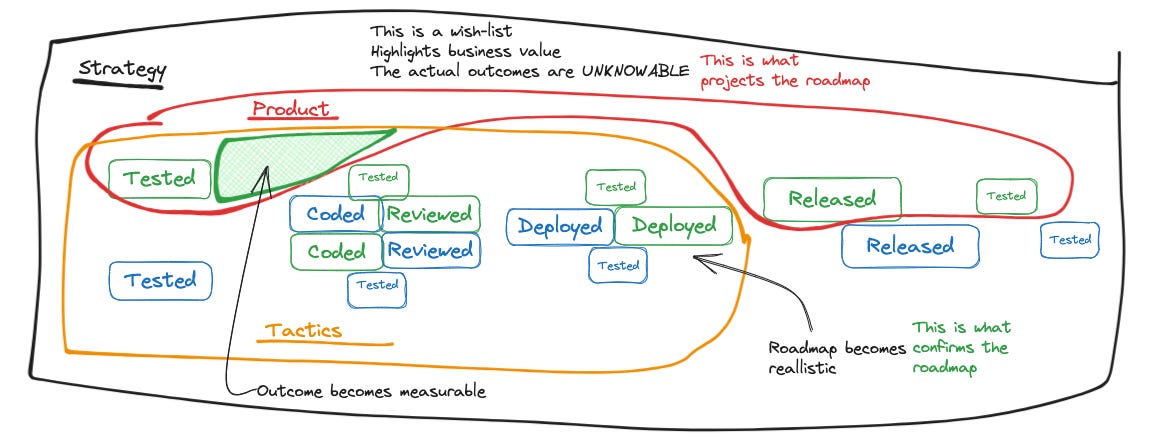

The source of most frustration in tech teams is being constantly judged against an unachievable roadmap. I have witnessed companies spend fortunes on creating perfect roadmaps. This is common.

I rarely see high-level roadmaps based on a team’s actual ability to deliver. The disconnect is a bottomless wish list. The outcome is a disgruntled product owner and a demotivated team.

This post explores why this happens and what you can do about it with your team.

Sin #1: Unknowable Unknowns, no re-planning

It is trivial to make simple plans when our knowledge of the problem is minimal. All built software has risky areas and unknowns. The most valuable unknowns are not knowable at the beginning of the project.

The real planning, and roadmapping, can only happen during the project.

Thus, the best time to re-plan is day one. That’s also the only moment to re-plan. If you wait too long it becomes another project.

Sin #2: Fake collaboration, fake estimates

There are two parts to estimates:

Forecasting completion of the solution

Communicating our understanding of the problem

I’ve seen these get mixed, combined or even ignored in all kinds of setups. The important takeaway for me is always this: you estimate your understanding of the business value, and you forecast the execution process.

A caveat: tests can fail. CI pipelines can fail. Deployments can be blocked or postponed. Reviews often end with changes requested. This is all re-work, so it’s important to keep the definition of done in mind.

For most for-profit business cases, done means released to production in a manner that is valuable to the end-user and allows revenue capture.

I believe I don’t have to explain to you what it means when you’re ready to deploy but no one cares whether it’s released or not.

The more pressure you put on the team to come up with ideal, fake estimates, the more pressure you’ll need to apply to actually get them to tell the truth during the project.

The execution context is not linear. There will be iterations. There will be re-work.

“Civilization advances by extending the number of important operations which we can perform without thinking about them.” - Alfred North Whitehead, mathematician

Sin #3: Testing after

A good target to aim for with any task is to get acceptance criteria defined as soon as possible. Behavior, technical details, anything that strongly pinpoints valuable characteristics. In high-performing teams this is often done during the planning phase and in the earliest stage of coding.

Test-first or don't test at all

“I’ll do it later” is one of the most common antitheses to improving engineering culture. Testing becomes exponentially more expensive the longer you wait. This has to do with how much re-work and re-understanding needs to be done after the fact if you don’t start with a

Slower teams that are used to batch deployments may defer this to QA testing or pre-production discovery. The timing is important: if your team defines these characteristics before starting implementation, they can derive important quality decisions from them and keep the solution simple.
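To make this concrete, here is a minimal sketch of acceptance criteria written down as executable checks before the implementation is settled. The feature (a free-shipping rule), the threshold, the fee and the name `shipping_fee` are all invented for illustration; your own criteria will look different.

```python
# Hypothetical acceptance criteria for an invented "free shipping" feature.
# The two test functions were conceptually written first; shipping_fee is
# the smallest implementation that satisfies them.
from decimal import Decimal

FREE_SHIPPING_THRESHOLD = Decimal("50.00")  # assumed business rule
FLAT_SHIPPING_FEE = Decimal("4.99")         # assumed business rule


def shipping_fee(order_total: Decimal) -> Decimal:
    """Return the shipping fee for an order total."""
    if order_total >= FREE_SHIPPING_THRESHOLD:
        return Decimal("0.00")
    return FLAT_SHIPPING_FEE


def test_orders_at_or_above_threshold_ship_free():
    assert shipping_fee(Decimal("50.00")) == Decimal("0.00")
    assert shipping_fee(Decimal("120.00")) == Decimal("0.00")


def test_orders_below_threshold_pay_flat_fee():
    assert shipping_fee(Decimal("49.99")) == Decimal("4.99")


if __name__ == "__main__":
    test_orders_at_or_above_threshold_ship_free()
    test_orders_below_threshold_pay_flat_fee()
    print("all acceptance checks passed")
```

Notice how the criteria force a decision about the boundary (is exactly 50.00 free or not?) before any code is written. That is the kind of quality decision that is cheap on day one and expensive after release.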

Less experienced teams make the mistake of assuming quality can be inspected into an existing solution. This is especially precarious when the initial, rushed implementation is not very testable: it will require re-work before any meaningful testing can be automated.

Successful Models of Execution

I know you like diagrams so the good stuff is complemented with drawings by yours truly 😬

Key differences, in order of importance

Define unit and acceptance tests

Pair program to get immediate feedback; you may notice this also makes review unnecessary

Separate releases and deployments using shadow deploys and feature flags

Feedback from production (or staging, if you prefer) is provided by acceptance tests
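Separating deployments from releases can be sketched roughly like this. Everything in it is hypothetical: the `FeatureFlags` class, the flag names `new_checkout` and `shadow_new_checkout`, and both checkout functions. Real setups usually read flags from a config service rather than an in-memory set.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FeatureFlags:
    """Hypothetical in-memory flag store; real ones are usually remote."""
    enabled: set = field(default_factory=set)

    def is_on(self, name: str) -> bool:
        return name in self.enabled


def legacy_checkout(total_cents: int) -> int:
    return total_cents  # current behaviour, already released


def new_checkout(total_cents: int) -> int:
    # Deployed but not released. Assumed new rule: 10% off orders of 100.00+.
    return total_cents * 90 // 100 if total_cents >= 10_000 else total_cents


def checkout(total_cents: int, flags: FeatureFlags, mismatches: List[int]) -> int:
    if flags.is_on("new_checkout"):
        return new_checkout(total_cents)  # released: users see the new path
    result = legacy_checkout(total_cents)
    if flags.is_on("shadow_new_checkout"):
        # Shadow mode: run the new code on real traffic, discard its result,
        # and record only the inputs where it disagrees with the old path.
        if new_checkout(total_cents) != result:
            mismatches.append(total_cents)
    return result


if __name__ == "__main__":
    seen: List[int] = []
    shadow = FeatureFlags({"shadow_new_checkout"})
    print(checkout(20_000, shadow, seen), seen)
```

The code for the new path ships with every deployment, but nobody sees it until the flag flips. In shadow mode the recorded mismatches tell you how many real orders the release would affect before you commit to it.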

Separate the Wish list from the Realistic

At the point of planning, all estimates are accurate. Meaning, they are not wrong; they are just estimates, not forecasts. A forecast becomes knowable and realistic once the team has shown some measure of progress.
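One common way to turn observed progress into a forecast is a Monte Carlo simulation over past throughput. The sketch below is an illustration under stated assumptions, not a prescribed method: the function name `forecast_weeks`, the sample throughput numbers and the choice of percentiles are all mine.

```python
import random
from typing import Dict, Sequence


def forecast_weeks(
    weekly_throughput: Sequence[int],
    backlog_size: int,
    trials: int = 10_000,
    seed: int = 42,
) -> Dict[int, int]:
    """Simulate finishing the backlog by resampling observed weekly throughput.

    Returns the number of weeks at the 50th, 85th and 95th percentiles.
    """
    if not weekly_throughput or min(weekly_throughput) < 0 or max(weekly_throughput) <= 0:
        raise ValueError("need non-negative throughput with at least one productive week")

    rng = random.Random(seed)  # seeded so the forecast is reproducible
    outcomes = []
    for _ in range(trials):
        remaining, weeks = backlog_size, 0
        while remaining > 0:
            # Assume any past week is equally likely to repeat.
            remaining -= rng.choice(weekly_throughput)
            weeks += 1
        outcomes.append(weeks)

    outcomes.sort()
    return {p: outcomes[min(trials - 1, trials * p // 100)] for p in (50, 85, 95)}


if __name__ == "__main__":
    # Hypothetical data: items finished in each of the last four weeks.
    print(forecast_weeks([3, 5, 2, 4], backlog_size=20))
```

The input is what the team actually delivered, not what anyone hoped for. With zero weeks of history there is nothing to resample, which is exactly the point: before Iteration 1 you have an estimate, not a forecast.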

This can be a 2-hour spike or a fully fleshed-out feature. Whatever it is, we may call it Iteration 1. This is often enough. Traditional project managers may call this an MVP or Prototype. It doesn’t have to be 3 months. Your team can get actionable

Don’t hope—define important parameters

You want zero bugs? Request a zero-bug quality check.

Do you want a smooth deploy? Practice deploying it.

Is coordination with a 3rd-party service crucial? Integrate it on day one and make the integration/acceptance tests visible to non-technical stakeholders.
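Making integration and acceptance tests visible to non-technical stakeholders can be as simple as giving each check a plain-language description and publishing the result. Here is a minimal sketch; `FakePaymentProvider` is a hypothetical stand-in for whatever third-party client you integrate with, and the check descriptions are invented.

```python
from typing import Callable, List, Tuple

Check = Tuple[str, Callable[[], bool]]


class FakePaymentProvider:
    """Hypothetical stand-in; a real check would call the vendor's sandbox."""

    def charge(self, amount_cents: int) -> dict:
        if amount_cents <= 0:
            return {"status": "rejected"}
        return {"status": "approved", "amount_cents": amount_cents}


def build_checks(provider: FakePaymentProvider) -> List[Check]:
    # Each description is written for a stakeholder, not for an engineer.
    return [
        ("A customer can pay for a normal order",
         lambda: provider.charge(1999)["status"] == "approved"),
        ("An empty order is never charged",
         lambda: provider.charge(0)["status"] == "rejected"),
    ]


def run_report(checks: List[Check]) -> List[str]:
    lines = []
    for description, check in checks:
        try:
            ok = check()
        except Exception:
            ok = False  # a crashing integration counts as a failing check
        lines.append(f"{'PASS' if ok else 'FAIL'}  {description}")
    return lines


if __name__ == "__main__":
    print("\n".join(run_report(build_checks(FakePaymentProvider()))))
```

Post the report somewhere the product owner already looks. If the integration breaks on day three, everyone knows on day three.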

The really important priorities should cost you. Invest in them early while it’s cheap.

great read. thanks for sharing

in an ideal world, how would it look to separate communicating understanding of the problem, and forecasting completion?